Back in May I wrote two posts on student feedback in Actuarial Techniques, the course I have taught in Semester 2 since 2013. Since I have recently received feedback for the 2016 iteration of the course, I thought it pertinent to review those posts and compare them with the new feedback.

And to be perfectly honest I am baffled.

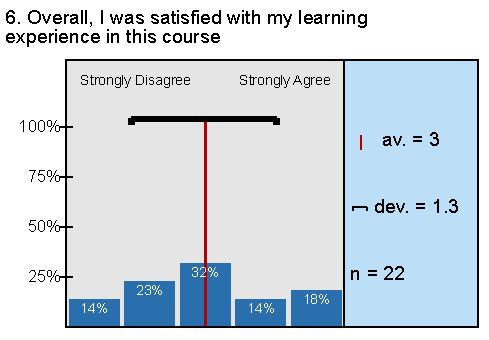

Shown below are the results for the “overall” question in 2015 for undergraduate students (78 enrolments and 22 respondents), which I discussed back in May.

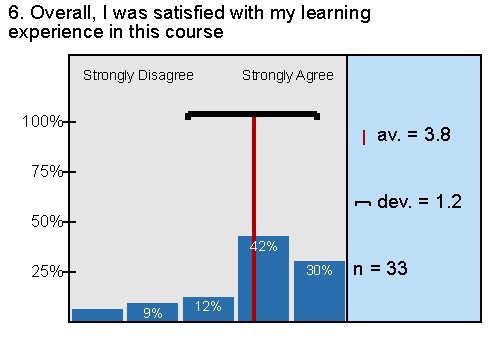

To fill out the discussion a little more, here are the results in 2015 for postgraduate students (67 enrolments and 34 respondents).

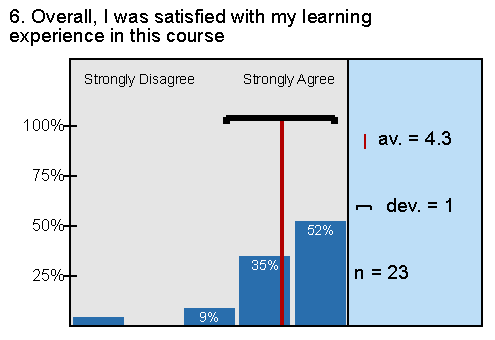

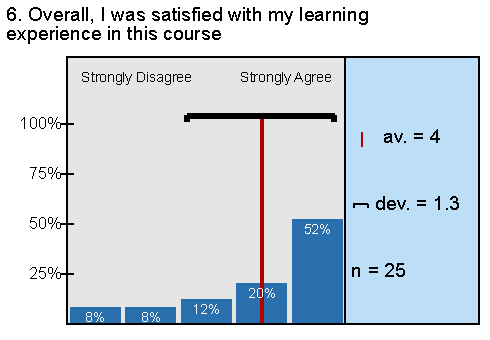

Now we move to the 2016 results, which is where things get interesting. First are the undergraduate results (83 enrolments and 23 respondents), and second are the postgraduate results (80 enrolments and 26 respondents).

The postgraduate results are not massively different; just a few of the 4s have become 5s. Nice! But the undergraduate results have changed enormously, from an average of 3 (my worst ever score) to an average of 4.3 (close to my best ever score).

I have said before that Actuarial Techniques is a course that inspires passion in students (since it is so different from other actuarial courses). Hence it has been a course in which I have struggled to get really high feedback in the past, because some of that passion converts to 1s from students who really wrestle with the collaborative elements of the course. So I'm very surprised by these results. There were two key changes I made to the course in 2016 that may have influenced the results:

- As mentioned in the class structure post, I introduced short video lectures for each week's material. These allow students to move ahead in the course if their group has allocated them an assignment component that we haven't yet covered in class.

- I set up the groups for the second assignment to be separated by undergraduate and postgraduate status (i.e. Assignment 1 groups had a mix of undergraduate and postgraduate students, whilst Assignment 2 groups were not mixed). I did this partly because some external issues made it attractive, and partly because I was interested in what impact it would have on the course.

One other factor that I suspect had some impact is that in 2015 there was some ambiguity in the wording of one of the exam questions, which caused some angst for a number of students.

Whilst I did get some very positive open-ended feedback on the videos, I find it hard to believe that these changes had such a dramatic impact on the results. I'm wondering whether there is some groupthink going on here that affects the results in one way or another. I suspect that students' opinions of the course are heavily influenced by how much they enjoy working with the groups they are allocated to. Since groups are randomly allocated, it is quite possible that pure luck could make groups more or less cohesive from cohort to cohort. Combine this with friends (who are likely to be in different groups) sharing their group work experiences, and there might be a recipe for groupthink in student feedback results. Who knows? I'm certainly not complaining! In any case, I suspect I won't be making any dramatic changes to the course in 2017, so it will be interesting to see what the feedback is for that iteration.

Now it would be remiss of me not to mention that the process of collecting and interpreting student feedback has many issues in and of itself. Just have a look at the response rates above (I doubt these are unbiased samples!). But this is a topic that has been much researched in its own right, and perhaps something I will ponder in more detail in a future blog post.
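To put numbers on those response rates, here is a small, purely illustrative Python sketch that computes them from the enrolment and respondent counts quoted in this post. The `cohorts` dictionary and the `response_rate` helper are my own hypothetical names, not part of any survey system. The arithmetic shows that in three of the four cohorts fewer than a third of enrolled students responded, with only the 2015 postgraduate cohort reaching about half.

```python
# Enrolment and respondent counts as quoted in this post, per cohort.
# (The dictionary layout is just an illustrative choice.)
cohorts = {
    "2015 undergraduate": (78, 22),
    "2015 postgraduate": (67, 34),
    "2016 undergraduate": (83, 23),
    "2016 postgraduate": (80, 26),
}


def response_rate(enrolments, respondents):
    """Fraction of enrolled students who completed the feedback survey."""
    return respondents / enrolments


# Print each cohort's response rate as a percentage to one decimal place.
for name, (enrolled, responded) in cohorts.items():
    rate = response_rate(enrolled, responded)
    print(f"{name}: {responded}/{enrolled} = {rate:.1%}")
```

A response rate on its own says nothing about whether respondents differ from non-respondents; it only indicates how much room there is for that kind of bias.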

Have a great Christmas and New Year everyone!

Adam Butt.